Regularization in Machine Learning: Meaning

Understanding regularization is essential for training a good model. In addition, there are cases where it is used to reduce the complexity of a model.


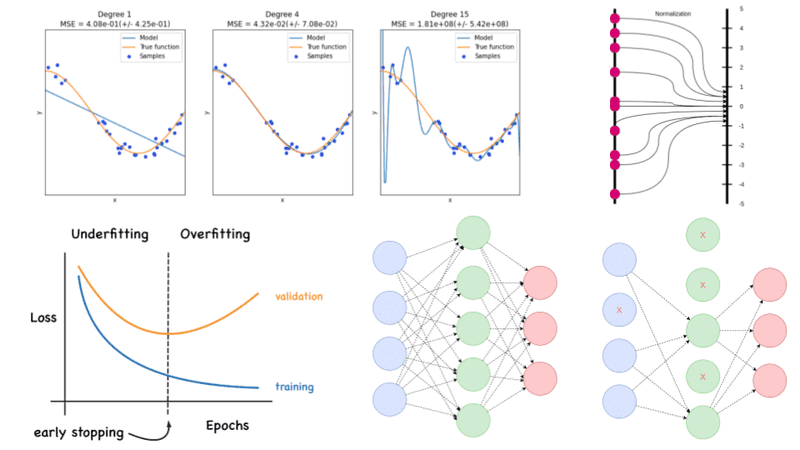

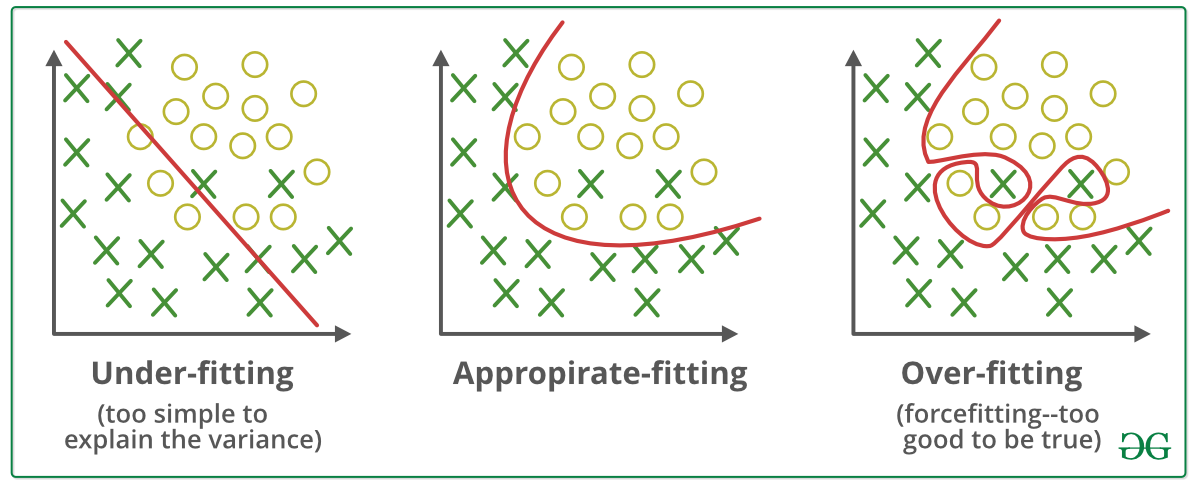

Both overfitting and underfitting are problems that ultimately cause poor predictions on new data.

Regularization is a technique that reduces error by fitting the function appropriately on the given training set while avoiding overfitting. It is mainly used to prevent overfitting and to obtain results for ill-posed problems. In regularized regression, the coefficient estimates are constrained, regularized, or shrunk towards zero.

This technique prevents the model from overfitting by adding extra information to it. When you overfeed a model with data that it does not have the capacity to handle, it starts acting irregularly. A simple relation for linear regression looks like this.

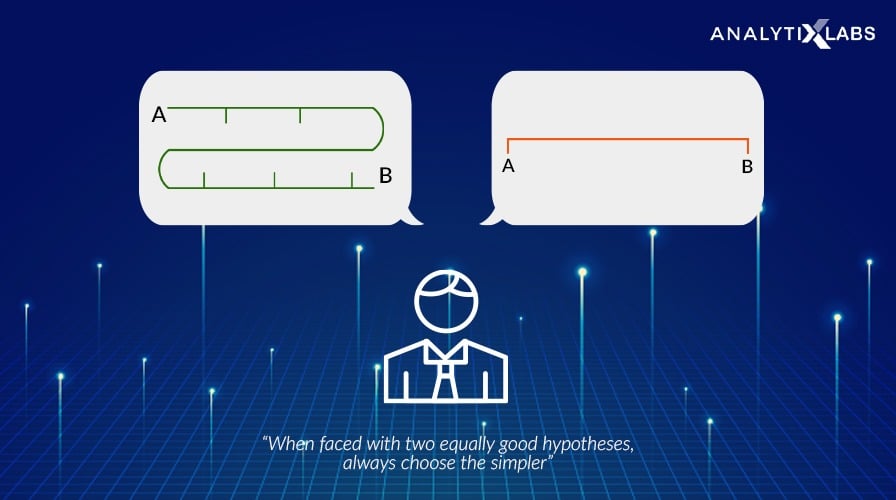
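The relation in the image is not available as text. A standard way to write it, assuming a response $Y$, predictors $X_1, \dots, X_p$, and coefficients $\beta_0, \dots, \beta_p$ (notation chosen here, not taken from the original figure), is:

$$
Y \approx \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \dots + \beta_p X_p
$$

Under the same assumed notation, fitting picks the coefficients that minimize the residual sum of squares over the $n$ training examples:

$$
\mathrm{RSS} = \sum_{i=1}^{n} \Big( y_i - \beta_0 - \sum_{j=1}^{p} \beta_j x_{ij} \Big)^2
$$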

Regularization is one of the most important concepts in machine learning. It is the process of simplifying the resulting answer. In regression, it constrains, regularizes, or shrinks the coefficient estimates towards zero.
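What shrinking a coefficient towards zero looks like can be sketched with the simplest possible case: a one-feature model with no intercept and a squared (ridge-style) penalty. The function name `ridge_slope`, the penalty strength `lam`, and the data are all made up for illustration; the source does not name a specific penalty.

```python
def ridge_slope(xs, ys, lam):
    """Minimizer of sum((y - w*x)^2) + lam * w^2 for a single weight w.

    Setting the derivative to zero gives w = sum(x*y) / (sum(x*x) + lam).
    """
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lam)

# Made-up data lying roughly on the line y = 2x.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.1, 3.9, 6.2, 7.8]

lams = (0.0, 1.0, 10.0, 100.0)
slopes = [ridge_slope(xs, ys, lam) for lam in lams]
for lam, slope in zip(lams, slopes):
    print(f"lam = {lam:6.1f} -> slope = {slope:.4f}")
```

With `lam = 0` the result is the ordinary least-squares slope; each larger `lam` pulls the slope closer to zero.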

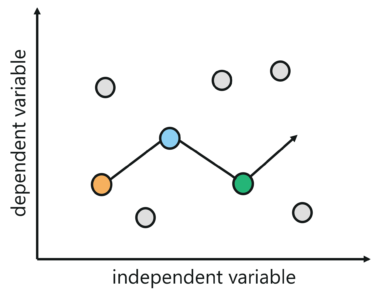

Regularization refers to techniques used to calibrate machine learning models so as to minimize the adjusted loss function and prevent overfitting or underfitting. Sometimes a machine learning model performs well on the training data but poorly on the test data. Regularization techniques improve performance by preventing overfitting in the designed model.
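The gap between training and test performance can be made concrete with a toy comparison. The sketch below uses made-up data (the line y = 2x, with alternating noise of +1 and -1 on the training targets) and two hypothetical models: a memorizer that returns the target of the nearest training point, and a one-parameter least-squares line. None of the names or numbers come from the original article.

```python
def mse(predict, xs, ys):
    """Mean squared error of a prediction function on (xs, ys)."""
    return sum((predict(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

# Made-up training data: y = 2x plus alternating noise of +1 / -1.
train_x = [1.0, 2.0, 3.0, 4.0, 5.0]
train_y = [3.0, 3.0, 7.0, 7.0, 11.0]
# Made-up test data taken from the noise-free line y = 2x.
test_x = [1.4, 2.4, 3.4, 4.4]
test_y = [2.8, 4.8, 6.8, 8.8]

def memorizer(x):
    """Very flexible model: return the target of the nearest training point."""
    nearest = min(range(len(train_x)), key=lambda i: abs(train_x[i] - x))
    return train_y[nearest]

# Simple model: least-squares slope for y = w * x (no intercept).
w = sum(x * y for x, y in zip(train_x, train_y)) / sum(x * x for x in train_x)

def line(x):
    return w * x

results = {
    "memorizer": (mse(memorizer, train_x, train_y), mse(memorizer, test_x, test_y)),
    "line": (mse(line, train_x, train_y), mse(line, test_x, test_y)),
}
for name, (train_err, test_err) in results.items():
    print(f"{name}: train MSE = {train_err:.3f}, test MSE = {test_err:.3f}")
```

The memorizer reproduces the training targets exactly, noise included, yet does worse than the simple line on the test points. That is the pattern regularization is meant to counter.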

In other words, to avoid overfitting, this strategy discourages learning a more complicated or flexible model. Here, "unknown" data means data that the model has not yet seen. The goal is to produce better results on the test set.

Regularization refers to modifications made to a learning algorithm that help reduce its generalization error but not its training error. The regularization penalty controls model complexity: larger penalties yield simpler models. It is also considered a process of adding more information to resolve a complex problem and avoid overfitting.

Regularization methods add constraints to do two things. In general, regularization means making things acceptable and regular. It is a technique for tuning the function by adding a penalty term to the error function.
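Adding a penalty term to the error function can be sketched as follows. The text does not say which penalty it has in mind, so this example assumes the two most common choices, the sum of squared weights (L2) and the sum of absolute weights (L1). The function names and the strength parameter `lam` are illustrative.

```python
def mse(weights, rows, targets):
    """Plain error function: mean squared error of a linear model."""
    total = 0.0
    for row, y in zip(rows, targets):
        prediction = sum(w * x for w, x in zip(weights, row))
        total += (y - prediction) ** 2
    return total / len(targets)

def penalized_loss(weights, rows, targets, lam, penalty="l2"):
    """Error function plus lam times a penalty on the size of the weights."""
    if penalty == "l2":
        size = sum(w * w for w in weights)      # squared magnitude
    elif penalty == "l1":
        size = sum(abs(w) for w in weights)     # absolute magnitude
    else:
        raise ValueError(f"unknown penalty: {penalty!r}")
    return mse(weights, rows, targets) + lam * size

# Made-up example: these weights fit the data exactly, so only the penalty remains.
rows = [[1.0, 0.0], [0.0, 1.0]]
targets = [1.0, 2.0]
weights = [1.0, 2.0]
l2_loss = penalized_loss(weights, rows, targets, lam=0.5, penalty="l2")
l1_loss = penalized_loss(weights, rows, targets, lam=0.5, penalty="l1")
print(l2_loss, l1_loss)
```

Even a perfect fit pays a cost proportional to the size of its weights, so the optimizer is pushed towards smaller coefficients.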

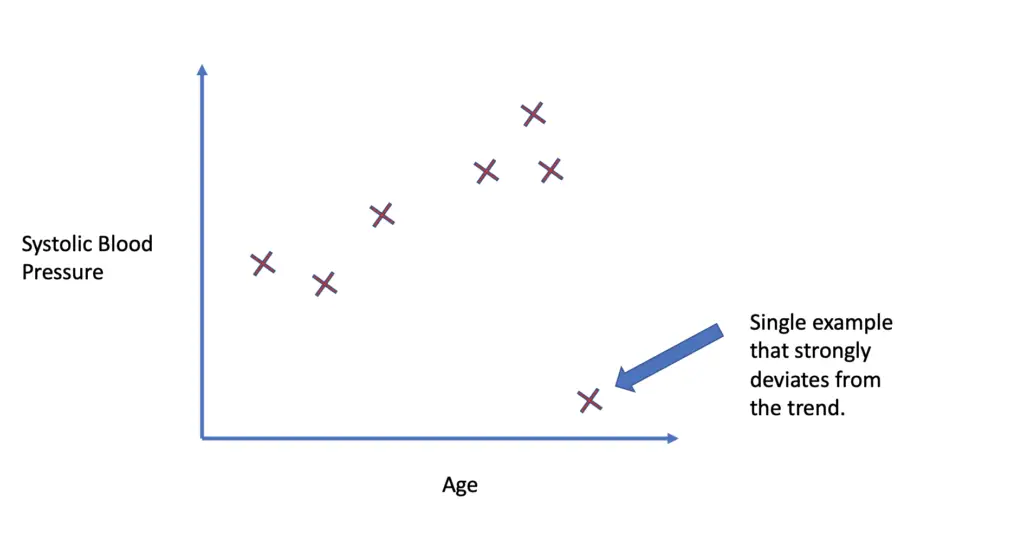

The two aims are to solve an ill-posed problem (a problem without a unique and stable solution) and to prevent model overfitting. In machine learning, regularization imposes an additional penalty on the cost function. Overfitting is a common problem. It occurs when a machine learning model is tuned to learn the noise in the data rather than the patterns or trends in the data.
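A small sketch of the ill-posed case: when one feature is an exact multiple of another, the least-squares normal equations have no unique solution, and adding a penalty `lam` to the diagonal (a ridge-style fix, assumed here for illustration) makes the system solvable. The helper names and data are hypothetical.

```python
def normal_equations(rows, targets, lam=0.0):
    """Build (X^T X + lam * I) and X^T y for a two-feature linear model."""
    a = [[lam, 0.0], [0.0, lam]]
    b = [0.0, 0.0]
    for row, y in zip(rows, targets):
        for i in range(2):
            b[i] += row[i] * y
            for j in range(2):
                a[i][j] += row[i] * row[j]
    return a, b

def solve_2x2(a, b):
    """Solve the 2x2 system a @ w = b by Cramer's rule; fail if it is singular."""
    det = a[0][0] * a[1][1] - a[0][1] * a[1][0]
    if abs(det) < 1e-12:
        raise ValueError("singular system: no unique solution")
    w0 = (b[0] * a[1][1] - a[0][1] * b[1]) / det
    w1 = (a[0][0] * b[1] - b[0] * a[1][0]) / det
    return [w0, w1]

# Made-up data: the second feature is exactly twice the first.
rows = [[1.0, 2.0], [2.0, 4.0], [3.0, 6.0]]
targets = [5.0, 10.0, 15.0]

try:
    solve_2x2(*normal_equations(rows, targets))
    unregularized_solved = True
except ValueError:
    unregularized_solved = False

regularized = solve_2x2(*normal_equations(rows, targets, lam=1.0))
print(unregularized_solved, regularized)
```

Without the penalty, infinitely many weight pairs fit equally well. With it, one stable pair is singled out.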

Regularization techniques prevent machine learning algorithms from overfitting. Regularization can be categorized in many ways. Regularization is any supplementary technique that aims at making the model generalize better, i.e. produce better results on the test set.

The commonly used regularization techniques are. It is one of the most important concepts in machine learning. Sometimes one resource is not enough to give you a good understanding of a concept.

Regularization is a technique that reduces a model's error by avoiding overfitting and training the model to function properly. It is a method for balancing overfitting and underfitting during training. Overfitting occurs when a machine learning model is constrained to the training set and unable to perform well on unseen data.

Using regularization, we simplify our model to an appropriate level so that it can generalize to unseen test data. The additional term controls an excessively fluctuating function so that the coefficients don't take extreme values. To understand the concept of regularization and its link with machine learning, we first need to understand why we need regularization.

Machine learning is about training a model with relevant data and using the model to make predictions on unknown data. In other terms, regularization means discouraging the learning of a more complex or more flexible machine learning model in order to prevent overfitting. Overfitting means the model is not able to predict the output when it deals with unseen data.

Regularization refers to the collection of techniques used to tune machine learning models by minimizing an adjusted loss function to prevent overfitting. It reduces model complexity by ignoring the less important features. In other words, this technique discourages learning a more complex or flexible model so as to avoid the risk of overfitting.
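One way a penalty can ignore less important features is to set their coefficients exactly to zero. The sketch below assumes an absolute-value (L1, lasso-style) penalty in the simplest setting, where each coefficient can be treated separately: minimizing `0.5 * (z - w)**2 + lam * abs(w)` over `w` gives the soft-thresholding rule shown. The coefficient values are made up.

```python
def soft_threshold(z, lam):
    """Minimizer of 0.5 * (z - w)**2 + lam * abs(w) over w."""
    if z > lam:
        return z - lam
    if z < -lam:
        return z + lam
    return 0.0  # small coefficients are dropped entirely

# Made-up unpenalized coefficients: two large, two small.
unpenalized = [3.0, -0.2, 0.05, -1.5]
penalized = [soft_threshold(z, lam=0.5) for z in unpenalized]
print(penalized)
```

The two small coefficients become exactly zero, so their features no longer affect predictions, while the large ones are shrunk by `lam`.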

One such categorization subdivides regularization into implicit regularization and explicit regularization. It is a technique to prevent the model from overfitting by adding extra information to it. In simple words, regularization discourages learning a more complex or flexible model to prevent overfitting.

What is regularization in machine learning? There are different ways to go about it, such as measuring a loss function and then iterating over.

This is exactly why we use it for applied machine learning. It also helps prevent overfitting, making the model more robust and decreasing its complexity. Overfitting in an existing model can be avoided by adding a penalty term to the cost function that gives a higher penalty to more complex curves.
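In iterative training, the penalty term shows up as one extra piece of the gradient. This is a minimal sketch, assuming a one-weight linear model, a squared penalty, and plain gradient descent; `fit_penalized`, `lam`, `lr`, and `steps` are illustrative names and values, not from the source.

```python
def fit_penalized(xs, ys, lam, lr=0.01, steps=500):
    """Gradient descent on sum((y - w*x)^2) + lam * w^2 for a single weight w."""
    w = 0.0
    for _ in range(steps):
        # Gradient of the data-fit term.
        grad_fit = -2.0 * sum(x * (y - w * x) for x, y in zip(xs, ys))
        # Gradient of the penalty term: always pulls w towards zero.
        grad_penalty = 2.0 * lam * w
        w -= lr * (grad_fit + grad_penalty)
    return w

# Made-up data lying roughly on the line y = 2x.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.1, 3.9, 6.2, 7.8]

w_plain = fit_penalized(xs, ys, lam=0.0)
w_penalized = fit_penalized(xs, ys, lam=5.0)
print(f"no penalty: {w_plain:.4f}, with penalty: {w_penalized:.4f}")
```

The learning rate is chosen small enough for this particular data; other data may need a different value.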

Regularization applies mainly to the objective functions of ill-posed optimization problems. It helps us fit a model that does not lean too heavily on the particulars of the training data. In the context of machine learning, regularization is the process that regularizes or shrinks the coefficients towards zero.

A well-chosen amount of regularization reduces the model's variance without a substantial increase in its bias. Regularization means making things acceptable or regular. It is applied to an over-fitted model.
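The trade between variance and bias can be worked out exactly in a toy case. The sketch assumes data generated as `y = beta * x + noise`, with independent noise of variance `noise_var`, and the one-feature ridge-style estimate `sum(x*y) / (sxx + lam)`, where `sxx = sum(x*x)`. All names and numbers are illustrative assumptions.

```python
def ridge_bias_variance(beta, sxx, noise_var, lam):
    """Bias and variance of the estimate sum(x*y) / (sxx + lam).

    Under y = beta * x + noise, the estimate has mean beta * sxx / (sxx + lam)
    and variance noise_var * sxx / (sxx + lam)**2.
    """
    bias = -lam * beta / (sxx + lam)
    variance = noise_var * sxx / (sxx + lam) ** 2
    return bias, variance

# Made-up setting: a small true slope relative to the noise level.
beta, sxx, noise_var = 0.1, 30.0, 1.0
summary = {}
for lam in (0.0, 3.0):
    bias, variance = ridge_bias_variance(beta, sxx, noise_var, lam)
    summary[lam] = (bias ** 2, variance, bias ** 2 + variance)
    print(f"lam = {lam}: bias^2 = {bias ** 2:.5f}, variance = {variance:.5f}, "
          f"total = {bias ** 2 + variance:.5f}")
```

Here the drop in variance outweighs the added squared bias, so the total expected error falls. That outcome depends on the assumed values: with a large true slope or too large a penalty, the bias term can dominate instead.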

Regularization is an important concept in machine learning, and it addresses the overfitting problem. Defining regularization as any supplementary technique that helps the model generalize might at first seem too general to be useful, but the authors of that definition also provide a taxonomy to make sense of the wealth of regularization approaches it encompasses. In regression, it shrinks the coefficient estimates towards zero.

A recap of linear regression. Regularization is one of the techniques used to control overfitting in highly flexible models. While regularization is used with many different machine learning algorithms, including deep neural networks, in this article we use linear regression to explain regularization and its usage.
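As a brief recap in code, this is a minimal sketch of unregularized simple linear regression (one feature plus an intercept) using the standard least-squares formulas. The function name `fit_line` and the data are made up for illustration.

```python
def fit_line(xs, ys):
    """Least-squares intercept and slope for y = intercept + slope * x."""
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    sxx = sum((x - x_mean) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = y_mean - slope * x_mean
    return intercept, slope

# Made-up data lying exactly on y = 1 + 2x.
intercept, slope = fit_line([1.0, 2.0, 3.0, 4.0], [3.0, 5.0, 7.0, 9.0])
print(intercept, slope)
```

Regularized variants keep this setup and add a penalty on the size of the slope to the quantity being minimized.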

In general, regularization means making things regular or acceptable.
